I’ll admit, using AI as a tool to teach me more about AI throws me off, but it is the expert on itself.

I started my coursework by switching to ‘Study’ mode and asking ChatGPT to walk me through Week 1 of the curriculum it had created. It titled the week “Introduction to Prompt Engineering & the AI Landscape,” a heading that would fit right in with my actual college courses. It covered the basics of prompt engineering, ending each section with questions or a ‘Try This!’ exercise (which I definitely did, if the professor asks). It also explained how the same prompt can produce different results across different models, simply because each model is tailored to a different audience.

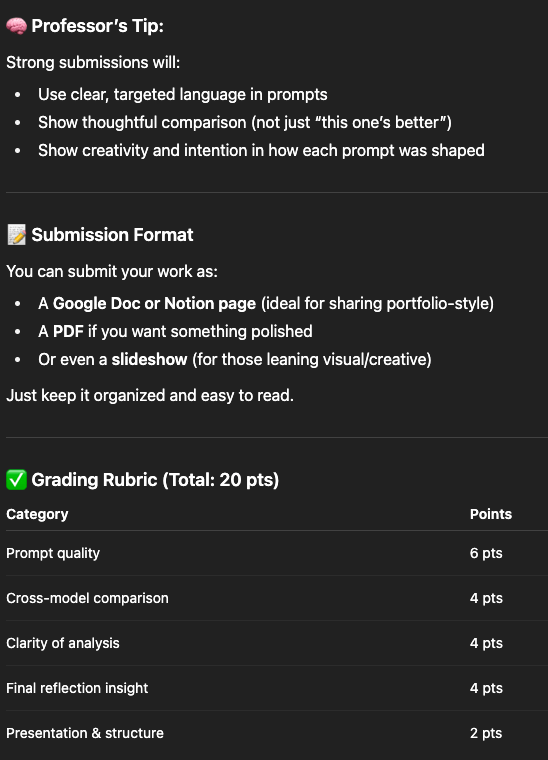

After the quick lesson, it gave me a project, which I asked it to rephrase as if it were a professor who would be handing out a grade. It listed three parts:

1. Prompt Design + Testing: Write three original prompts – one creative, one business, and one technical – and run each across at least two AI tools

2. Analysis: For each prompt, describe the goal and the outputs from each tool, then compare the outputs with each other and with the original goal

3. Reflection: Write a short essay about what I learned and how I can use it

It even gave some tips from a professor on what a strong submission would look like, then capped it all off with a rubric, assigning points to prompt quality, comparison, structure, and more.

Side note – it assigned a due date for the end of Week 1 and said that it would check in periodically as that approached. I completed it quickly, but now I’m wondering if it actually would have checked in on my progress. I’ll test that next week.

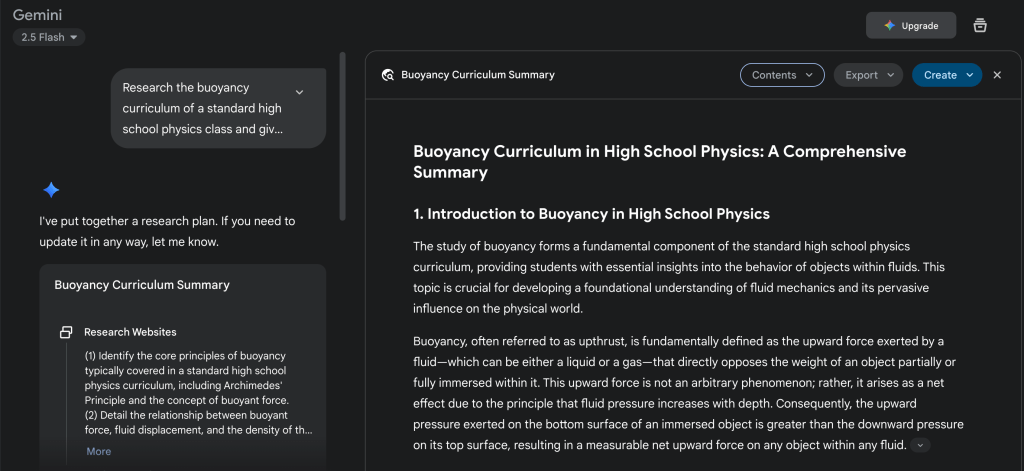

I decided to use two models: ChatGPT, which I already knew, and Gemini, which I didn’t. For the creative prompt, I asked the models to write a poem about watching the sunset as a hopeful young adult. My business prompt had them write a marketing email advertising a new hoodie line to returning customers. Finally, for the technical prompt, which turned out to be the most useful, I asked the models to summarize a high-school physics lesson on buoyancy for a student cramming for an exam. The variety of topics let each model show off its strengths.

With that in mind, I got to work, using the first prompts that came to mind. As a result, I found myself moved by artificially artistic poetry, persuaded to pay more than usual for a better hoodie, and staying up late to cram for tomorrow’s physics exam.

Overall, ChatGPT was more concise than Gemini. Across all three prompts, it gave shorter, more to-the-point responses: the quick and simple answers a modern teenager is most likely looking for. That suggests each model has its own uses: ChatGPT for the everyday user, and Gemini for more in-depth creative work or research.

The two models differed most on the physics summary prompt. ChatGPT gave a lesson that covered the content quickly and added practice questions. Gemini produced a full research report, complete with a table of common misconceptions but no practice problems. At first glance, ChatGPT looks like the clear winner, but I realized this was most likely user error on my part: I had switched Gemini to its ‘Research’ mode, which pushed the response far beyond my original goal.

After one lesson from AI about itself, where does that leave me? Well, I learned that each model has its own strengths, and knowing your intention or audience can help you decide which model to use. The biggest takeaway is that being specific from the beginning always helps when working with AI, regardless of the model. Specificity avoids confusion and frustration and saves time later on, even if it takes a few more seconds on the front end. The lesson also reaffirmed that writing prompts isn’t just Googling – it takes work before, during, and after the model responds.

Next week takes this a step further, looking at what separates basic prompting techniques from advanced ones and how to apply them in a variety of settings.

Final Score: 19.5/20, with half a point taken off for the clarity of my analysis.

I think the professor has something against giving an A+.

If you want to see my full work, including the original prompts, raw results, and final essay, I’ve linked the Google Doc here.
